Python Threading, Semaphores, and Barriers

1. Introduction to Python Threading

Picture a bustling kitchen during the dinner rush. Chefs, sous chefs, and kitchen staff all work simultaneously, each focused on their tasks but coordinating their efforts to create a seamless dining experience. This orchestrated chaos is not unlike Python threading, where multiple tasks run concurrently within a single program.

Threading in Python allows developers to write programs that can juggle multiple operations at once, much like the kitchen described above. It's a way to make your code more efficient and responsive, and to handle complex tasks without chaos and anxiety.

Let's break it down with a simple example:
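Here is a minimal sketch of such a kitchen. The function names prepare_dish and run_kitchen, the finished list, and the sleep durations are assumptions made up for this illustration, so treat it as one possible version rather than the definitive one:

```python
import threading
import time


def prepare_dish(dish, seconds, finished):
    """Simulate one chef preparing a dish (sleep stands in for the cooking)."""
    print(f"Starting to prepare {dish}")
    time.sleep(seconds)
    print(f"{dish} is ready!")
    finished.append(dish)  # record which dishes were completed


def run_kitchen():
    finished = []
    # Hypothetical durations: in this sketch the pasta is quicker than the salad.
    chefs = [
        threading.Thread(target=prepare_dish, args=("Pasta", 0.2, finished)),
        threading.Thread(target=prepare_dish, args=("Salad", 0.4, finished)),
    ]
    for chef in chefs:
        chef.start()  # both chefs begin working concurrently
    for chef in chefs:
        chef.join()   # wait until every dish is done
    print("All dishes are prepared!")
    return finished


if __name__ == "__main__":
    run_kitchen()
```

The exact order of the printed lines depends on thread scheduling, so a run may not always interleave the same way.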

Output:

Starting to prepare Pasta

Starting to prepare Salad

Pasta is ready!

Salad is ready!

All dishes are prepared!

In this snippet, we've created two threads that simulate chefs preparing different dishes. The magic happens when both threads start – they run simultaneously, mimicking two chefs working side by side in the kitchen.

Threading shines in scenarios where you're dealing with I/O-bound tasks, like reading files or making network requests. It allows your program to continue working while waiting for these potentially slow operations to complete.

However, it's not all sunshine and rainbows. 😦 Threading comes with its own set of challenges:

- Race conditions: When threads access shared resources, unexpected results can occur if not managed properly.

- Deadlocks: Threads can get stuck waiting for each other, like two polite people insisting the other go first through a doorway. (BTW, I have written an article about deadlocks in Python here)

- Complexity: Debugging threaded applications can be tricky, as the order of execution isn't always predictable.
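To make the first of these challenges concrete, here is a small sketch of several threads updating one shared counter, with a threading.Lock guarding the update. The names counter, add_orders, and count_orders are hypothetical and exist only for this illustration:

```python
import threading

counter = 0
counter_lock = threading.Lock()


def add_orders(n):
    """Increment the shared counter n times."""
    global counter
    for _ in range(n):
        # "counter += 1" is a read-modify-write sequence; the lock makes sure
        # only one thread performs it at a time.
        with counter_lock:
            counter += 1


def count_orders(num_threads=4, per_thread=10_000):
    global counter
    counter = 0
    threads = [
        threading.Thread(target=add_orders, args=(per_thread,))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(count_orders())
```

Without the lock, two threads could read the same old value and one of the increments could be lost, which is exactly the kind of unexpected result a race condition produces.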

As we venture deeper into the world of Python threading, we'll explore tools like semaphores and barriers that help manage these challenges. These synchronization primitives act like the head chef in our kitchen, ensuring that all the moving parts work together harmoniously.

Threading is a powerful tool in a developer's toolkit, but it requires careful handling. In the following sections, I will explain semaphores and barriers, so you never have to question what they are for and how to use them 😎. So get ready, we're about to cook up some serious code, hehe..!

2. Understanding Semaphores in Python

Imagine a busy coffee shop with a limited number of espresso machines. Baristas need to coordinate their use to avoid chaos and ensure smooth operation. This scenario perfectly illustrates the concept of semaphores in Python threading.

Semaphores are like the shift manager at our imaginary coffee shop, controlling access to shared resources. They act as counters, allowing a set number of threads to access a resource simultaneously. When a thread wants to use a resource, it asks the semaphore for permission. If the semaphore's count is greater than zero, the thread is allowed access, and the count decreases. When the thread is done, it notifies the semaphore, and the count increases.

Let's break this down with a Python example:
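A minimal sketch of the coffee shop could look like this. The names espresso_machines, make_coffee, and open_shop are assumptions, and the busy/peak_busy bookkeeping is added only so the sketch can show that the limit is respected:

```python
import threading
import time

# Two espresso machines, so at most two baristas can brew at the same time.
espresso_machines = threading.Semaphore(2)

# Bookkeeping for this sketch only: track how many machines are busy.
stats_lock = threading.Lock()
busy = 0
peak_busy = 0


def make_coffee(barista):
    global busy, peak_busy
    with espresso_machines:  # count goes down here, back up when the block exits
        with stats_lock:
            busy += 1
            peak_busy = max(peak_busy, busy)
        print(f"{barista} is using the espresso machine")
        time.sleep(0.2)  # stand-in for the time it takes to brew
        print(f"{barista} is done making coffee")
        with stats_lock:
            busy -= 1


def open_shop():
    global peak_busy
    peak_busy = 0
    baristas = [
        threading.Thread(target=make_coffee, args=(name,))
        for name in ("Alice", "Bob", "Charlie", "David")
    ]
    for b in baristas:
        b.start()
    for b in baristas:
        b.join()
    print("All coffee orders are complete!")
    return peak_busy  # never exceeds the semaphore's initial count of 2


if __name__ == "__main__":
    open_shop()
```

Which two baristas get a machine first is up to the scheduler, so the names may appear in a different order from run to run.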

Output:

Alice is using the espresso machine

Bob is using the espresso machine

Bob is done making coffee

Alice is done making coffee

David is using the espresso machine

Charlie is using the espresso machine

David is done making coffee

Charlie is done making coffee

All coffee orders are complete!

In this example, we've created a semaphore that allows two threads (baristas) to use the espresso machines simultaneously. The with statement ensures that the semaphore is properly released even if an exception occurs, to avoid deadlock.

Semaphores come in two flavors:

- Counting Semaphores: These allow a specified number of threads to access a resource, like in our coffee shop example.

- Binary Semaphores: These are essentially locks, allowing only one thread at a time.

Semaphores are particularly useful when you need to:

- Limit access to a fixed number of resources

- Control the order of thread execution

- Implement producer-consumer scenarios

However, they're not without potential pitfalls:

- Deadlocks can occur if not used carefully

- Overuse can lead to reduced parallelism

- They don't protect against all types of race conditions

As you work with semaphores, you'll find they're a powerful tool for managing concurrency. They allow you to create more complex and efficient threaded applications, much like how a well-organized coffee shop can serve more customers with limited resources.

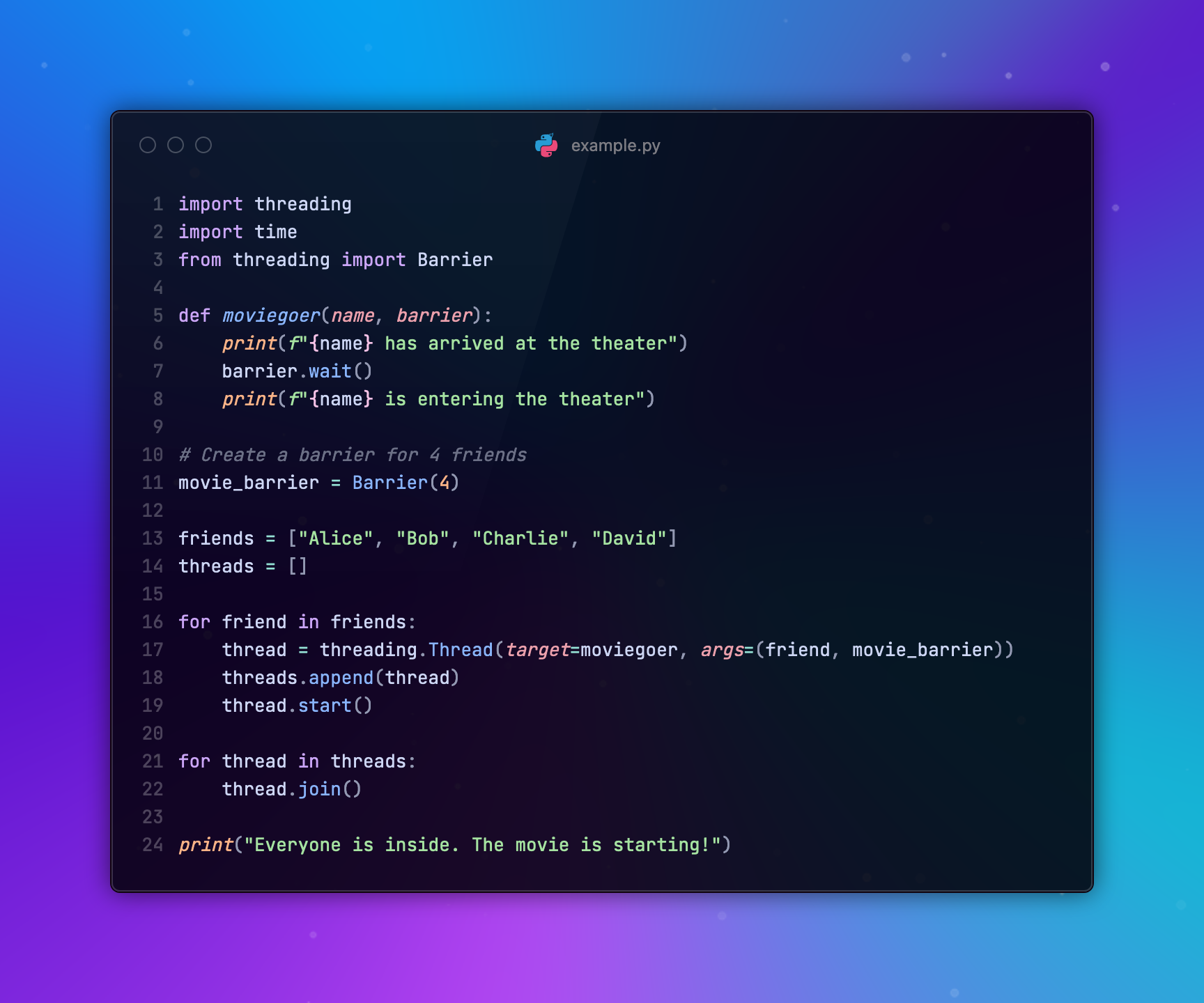

3. Barriers: Synchronizing Multiple Threads

Imagine a group of friends planning to meet at a movie theater. They've agreed that no one enters until everyone arrives. This scenario perfectly captures the essence of barriers in Python threading.

Barriers act as synchronization points in multithreaded programs, ensuring that every thread in a group reaches a certain point before any of them can proceed. It's like a virtual meeting spot where threads wait for their peers before moving forward together.

Let's break this down with a Python example:
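Here is a minimal sketch of the movie night using threading.Barrier. The names theater_entrance, go_to_movie, and movie_night, the staggered arrival delays, and the events list are assumptions made for this illustration:

```python
import threading
import time

FRIENDS = ["Alice", "Bob", "Charlie", "David"]

# The barrier opens only once all four friends are waiting at it.
theater_entrance = threading.Barrier(len(FRIENDS))


def go_to_movie(name, delay, events):
    time.sleep(delay)  # hypothetical travel time
    print(f"{name} has arrived at the theater")
    events.append(("arrived", name))
    theater_entrance.wait()  # block until everyone has arrived
    print(f"{name} is entering the theater")
    events.append(("entering", name))


def movie_night():
    events = []
    threads = [
        threading.Thread(target=go_to_movie, args=(name, 0.05 * i, events))
        for i, name in enumerate(FRIENDS)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print("Everyone is inside. The movie is starting!")
    return events


if __name__ == "__main__":
    movie_night()
```

Because print writes the text and the trailing newline separately, lines from different threads can occasionally run together when several threads are released at the same moment.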

Output:

Alice has arrived at the theater

Bob has arrived at the theaterCharlie has arrived at the theater

David has arrived at the theater

David is entering the theaterAlice is entering the theater

Bob is entering the theater

Charlie is entering the theater

Everyone is inside. The movie is starting!

In this example, we've created a barrier for four friends. Each thread (representing a friend) calls barrier.wait() when they arrive. The barrier blocks until all four friends have called wait(). Once the last friend arrives, the barrier releases all threads simultaneously.

Barriers are particularly useful when:

- You need to synchronize multiple threads at specific points in your program

- You're dealing with phased operations where all threads must complete one phase before moving to the next

- You want to ensure that data is fully prepared before processing begins

Some key points to remember about barriers:

- They're reusable: After all threads are released, the barrier resets and can be used again.

- They can have a timeout: You can set a maximum wait time to prevent indefinite blocking.

- They can execute an action when the barrier is full: One of the waiting threads runs the action just before all of them are released, which is useful for setup or cleanup operations.

Here's an extended example showcasing these features:
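One way to sketch a multi-phase job with these features is shown below. The worker count, phase count, the 5-second timeout, and the names phase_complete, worker, and run_phases are all assumptions chosen for illustration:

```python
import random
import threading
import time

NUM_WORKERS = 3
NUM_PHASES = 3
phases_completed = []


def phase_complete():
    # Called by exactly one thread each time the barrier fills up.
    print("All threads reached the barrier. Starting next phase.")
    phases_completed.append(len(phases_completed))


# action runs when the barrier is full; timeout is the default for every wait().
barrier = threading.Barrier(NUM_WORKERS, action=phase_complete, timeout=5)


def worker():
    name = threading.current_thread().name
    for phase in range(NUM_PHASES):
        time.sleep(random.uniform(0.01, 0.1))  # simulated work
        print(f"{name} finished phase {phase}")
        try:
            barrier.wait()  # the barrier resets itself after each release
        except threading.BrokenBarrierError:
            print(f"{name} gave up: the barrier was broken or timed out")
            return


def run_phases():
    phases_completed.clear()
    threads = [
        threading.Thread(target=worker, name=f"Worker-{i}")
        for i in range(NUM_WORKERS)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print("All work completed")
    return list(phases_completed)


if __name__ == "__main__":
    run_phases()
```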

Output:

Worker-2 finished phase 0

Worker-0 finished phase 0

Worker-1 finished phase 0

All threads reached the barrier. Starting next phase.

Worker-2 finished phase 1

Worker-1 finished phase 1

Worker-0 finished phase 1

All threads reached the barrier. Starting next phase.

Worker-0 finished phase 2

Worker-2 finished phase 2

Worker-1 finished phase 2

All threads reached the barrier. Starting next phase.

All work completed

This example demonstrates a multi-phase operation where threads synchronize at the end of each phase. The barrier executes a function when full and has a timeout to prevent indefinite waiting.

While barriers are powerful, they're not without potential issues:

- They can lead to deadlocks if not all threads reach the barrier

- Overuse can reduce parallelism and overall performance

- They may mask logical errors in your program flow

Barriers provide a good way to coordinate multiple threads so you can create more structured and predictable multithreaded applications. As you explore more threading scenarios, you'll find barriers to be a key tool to fight the chaos 🥷

4. Implementing Semaphores and Barriers

Now that you've got the concepts of semaphores and barriers, let's roll up our sleeves and see how we can implement these synchronization tools in real-world scenarios. Think of this as moving from theory to practice, like transitioning from reading a cookbook to actually cooking in the kitchen.

Let's start with a practical example that combines both semaphores and barriers. Imagine we're simulating a multi-stage rocket launch, where different systems need to be checked and synchronized before liftoff.
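A minimal sketch of such a simulation follows. The names check_slots, launch_barrier, system_check, and launch, as well as the sleep range, are assumptions made for this illustration:

```python
import random
import threading
import time

SYSTEMS = ["Propulsion", "Navigation", "Life Support", "Communication"]

check_slots = threading.Semaphore(3)              # at most 3 checks at once
launch_barrier = threading.Barrier(len(SYSTEMS))  # all 4 systems must be ready


def system_check(system, ready):
    with check_slots:
        print(f"Starting system check: {system}")
        time.sleep(random.uniform(0.05, 0.2))  # simulated check duration
        print(f"System check complete: {system}")
    # The slot is released before waiting, so the fourth system can start.
    print(f"{system} waiting for other systems")
    launch_barrier.wait()
    print(f"{system} ready for launch!")
    ready.append(system)


def launch():
    ready = []
    threads = [
        threading.Thread(target=system_check, args=(system, ready))
        for system in SYSTEMS
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print("All systems go! Initiating launch sequence.")
    return ready


if __name__ == "__main__":
    launch()
```

One design choice worth noting: the barrier wait sits outside the with block. If a thread held its semaphore slot while waiting at the barrier, three systems would occupy all three slots, the fourth could never run its check, and the launch would hang.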

Output:

Starting system check: Propulsion

Starting system check: Navigation

Starting system check: Life Support

System check complete: Life Support

Life Support waiting for other systems

Starting system check: Communication

System check complete: Navigation

Navigation waiting for other systems

System check complete: Propulsion

Propulsion waiting for other systems

System check complete: Communication

Communication waiting for other systems

Communication ready for launch!Life Support ready for launch!

Propulsion ready for launch!

Navigation ready for launch!

All systems go! Initiating launch sequence.

In this rocket launch simulation:

- We use a semaphore to limit the number of concurrent system checks to 3. This might represent a limitation in the number of technicians or diagnostic tools available.

- The barrier ensures that all four main systems complete their checks before the launch sequence can begin.

- Each system check takes a random amount of time, simulating real-world variability.

This example showcases how semaphores and barriers can work together to create a more complex and realistic synchronization scenario.

💡 When implementing your next rocket launcher project with semaphores and barriers, keep these tips in mind:

- Always release semaphores: Use context managers (with statements) or try/finally blocks to ensure semaphores are released.

- Handle potential exceptions: Barrier's wait() method can raise a BrokenBarrierError if the barrier is reset or times out.

- Consider using timeouts: Both semaphores and barriers support timeouts to prevent indefinite waiting.

- Be mindful of the thread count: Ensure the number of threads matches the barrier count to avoid deadlocks.
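The timeout and exception tips can be sketched as follows. The function names try_with_timeout and wait_alone_at_barrier and the 0.1-second timeouts are hypothetical, chosen only to keep the illustration short:

```python
import threading


def try_with_timeout():
    """Show that acquire() returns False instead of blocking forever."""
    slots = threading.Semaphore(1)
    slots.acquire()  # occupy the only slot
    try:
        # Nothing is free, so this gives up after 0.1 s and returns False.
        got_it = slots.acquire(timeout=0.1)
    finally:
        slots.release()  # always give the first slot back
    return got_it


def wait_alone_at_barrier():
    """Show what happens when fewer threads arrive than the barrier expects."""
    barrier = threading.Barrier(2)  # expects two parties, only one shows up
    try:
        barrier.wait(timeout=0.1)
    except threading.BrokenBarrierError:
        return "barrier broken"
    return "passed"


if __name__ == "__main__":
    print(try_with_timeout())
    print(wait_alone_at_barrier())
```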

6. Performance Considerations

When working with Python threading, semaphores, and barriers, performance is like a delicate balancing act. It's not just about making your code run faster, but about making it run smarter. Let's dive into some key performance considerations that can help you fine-tune your multithreaded applications.

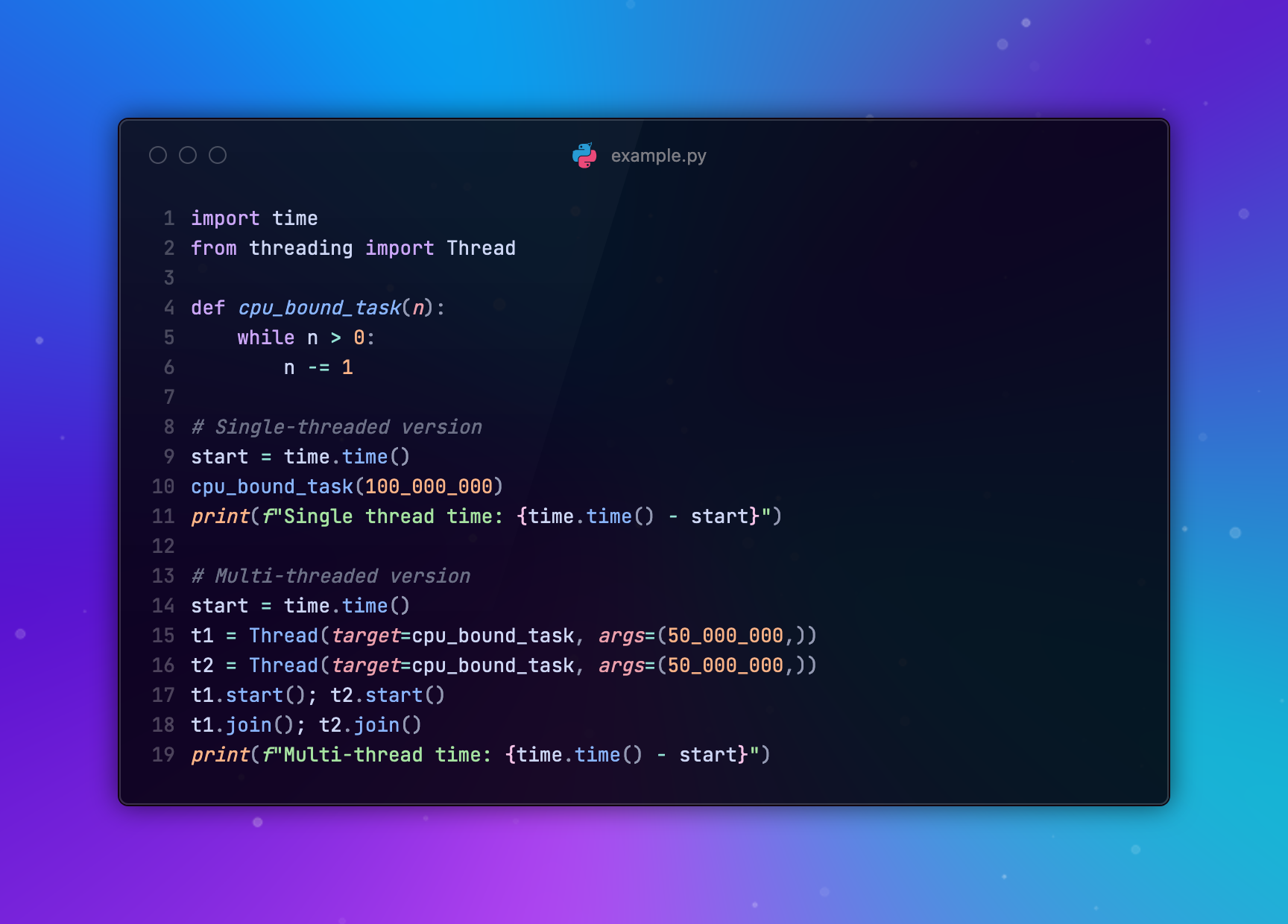

The Global Interpreter Lock (GIL)

First things first, let's address the elephant in the room: Python's Global Interpreter Lock (GIL). The GIL is like a traffic controller that only allows one thread to execute Python bytecode at a time. This means that for CPU-bound tasks, threading might not give you the performance boost you're expecting.
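A rough way to see this effect is to time a pure-Python countdown once in a single thread and once split across two threads. The function names and the loop size below are assumptions, and the timings you get will depend on your machine and Python build:

```python
import threading
import time


def count_down(n):
    """CPU-bound busy loop: pure Python bytecode, no I/O."""
    while n > 0:
        n -= 1


def time_single(n):
    start = time.perf_counter()
    count_down(n)
    return time.perf_counter() - start


def time_threaded(n, num_threads=2):
    # Same total amount of work, split evenly across the threads.
    threads = [
        threading.Thread(target=count_down, args=(n // num_threads,))
        for _ in range(num_threads)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    N = 10_000_000  # hypothetical workload size
    print("Single thread time:", time_single(N))
    print("Multi-thread time:", time_threaded(N))
```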

Output:

Single thread time: 1.9691720008850098

Multi-thread time: 1.9881441593170166

Running this code, you might be surprised to find that the multi-threaded version doesn't perform significantly better, and might even be slower due to the overhead of thread management.

I/O Bound is Where Threading Shines

For I/O-bound tasks, however, threading can significantly improve performance. While one thread is waiting for I/O, others can execute, making efficient use of time.
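Here is a small sketch of the idea. To stay self-contained it uses time.sleep as a stand-in for a slow network call, which is an assumption of this illustration, as are the names fake_download, run_sequential, and run_parallel. The absolute timings will therefore differ from any run against real I/O:

```python
import threading
import time


def fake_download(task_id, results, delay=0.1):
    # sleep stands in for waiting on the network; other threads run meanwhile
    time.sleep(delay)
    results[task_id] = f"response-{task_id}"


def run_sequential(n):
    results = {}
    start = time.perf_counter()
    for i in range(n):
        fake_download(i, results)  # each call waits for the previous one
    return time.perf_counter() - start


def run_parallel(n):
    results = {}
    threads = [
        threading.Thread(target=fake_download, args=(i, results))
        for i in range(n)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # all the waits overlap instead of adding up
    return time.perf_counter() - start


if __name__ == "__main__":
    print("Sequential time:", run_sequential(10))
    print("Parallel time:", run_parallel(10))
```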

Output:

Sequential time: 23.489413738250732

Parallel time: 2.472808837890625

This example demonstrates how threading can dramatically speed up I/O-bound operations.

7. The end

So now you know the most important tools to tame Python threading and improve the performance of your backend server or whatever you use it for 🥸. If you've read this far, I just want to thank you and wish you the best day ever ;). Stay cool, stay positive and pay attention to semaphores on the roads too!

Happy coding!